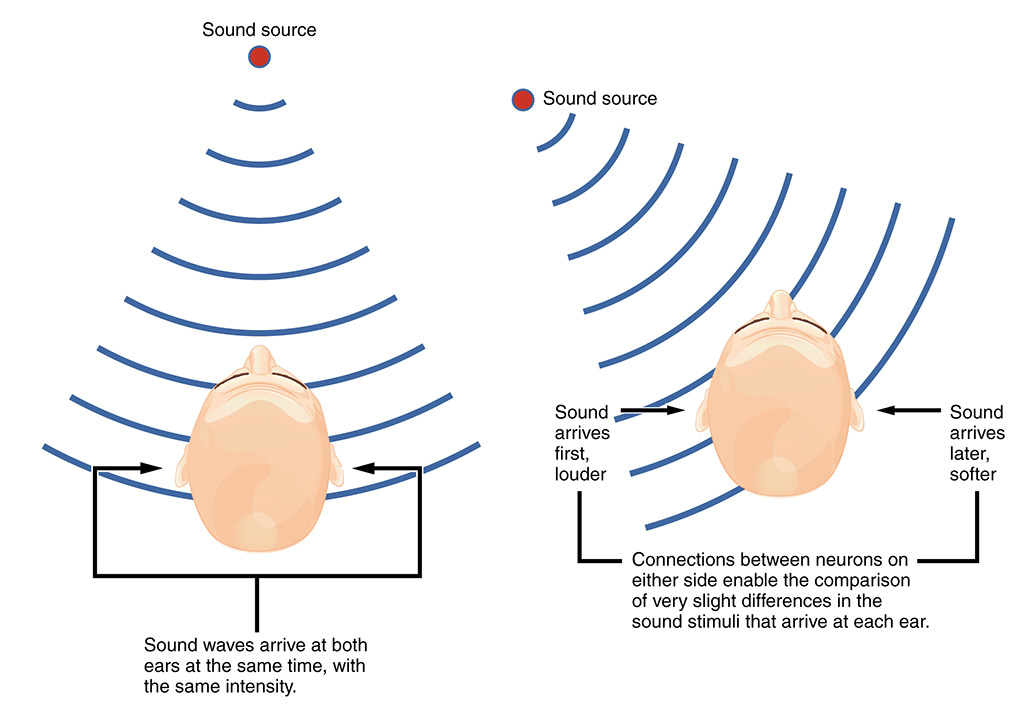

Sound is essential for an immersive experience. Once you have 3D visuals, you expect to hear sounds coming from the direction of their source, and you expect them to change as you walk past.

We wanted to use VR to host a contemporary music experience and to give composers a new way to express themselves, with the possibility of employing new sound topologies beyond the traditional orchestra arrangement. Thus the Trick the Ear: music in VR project was born.

We decided to implement all of this on the web. Users don't have to download any app; a web browser and headphones are the only things they need. Even better, it works on desktops, notebooks and full-fledged VR headsets. On a mobile phone you are somewhat limited, but more on that later.

To download the code and resources, head to https://tricktheear.eu/download/

The whole application is built with A-Frame, a framework for VR and AR projects that run directly in the web browser. All major browsers supported it as of August 2020. VR on the web is an extremely fast-developing field, so new standards and changes are to be expected.

When searching for the best audio format, we first considered and prototyped independent 3D sound sources linked to virtual objects using the Web Audio API. In A-Frame this is super easy! You simply add the sound component to your 3D model:

sound="src: yoursong.mp3; autoplay: true"

…and you are done! Of course, when you need to synchronize multiple sounds so that they start at once, it gets a little more tricky.
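One way to approach the synchronized start is to wait until every sound has loaded and only then start them all in the same tick. The sketch below is a minimal illustration and not the project's actual code: `startTogether` is a hypothetical helper, and it assumes each entity uses A-Frame's `sound` component with `autoplay: false`, relying on that component's `sound-loaded` event and `playSound()` method.

```javascript
// Start several A-Frame sound entities together once all of them have loaded.
// Assumes every entity carries a `sound` component with autoplay disabled and
// that this function runs before the audio files finish loading.
function startTogether(entities) {
  let pending = entities.length;

  const onLoaded = () => {
    pending -= 1;
    if (pending > 0) return; // still waiting for other sources

    // Everything is buffered: trigger all sources back to back.
    for (const entity of entities) {
      entity.components.sound.playSound();
    }
  };

  for (const entity of entities) {
    entity.addEventListener('sound-loaded', onLoaded, { once: true });
  }
}

// In the browser this might be called as:
//   startTogether(Array.from(document.querySelectorAll('[sound]')));
```

Starting the sources in one loop keeps them close together, but it gives no guarantee of sample-accurate alignment, and browsers may still require a user gesture before any audio plays.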

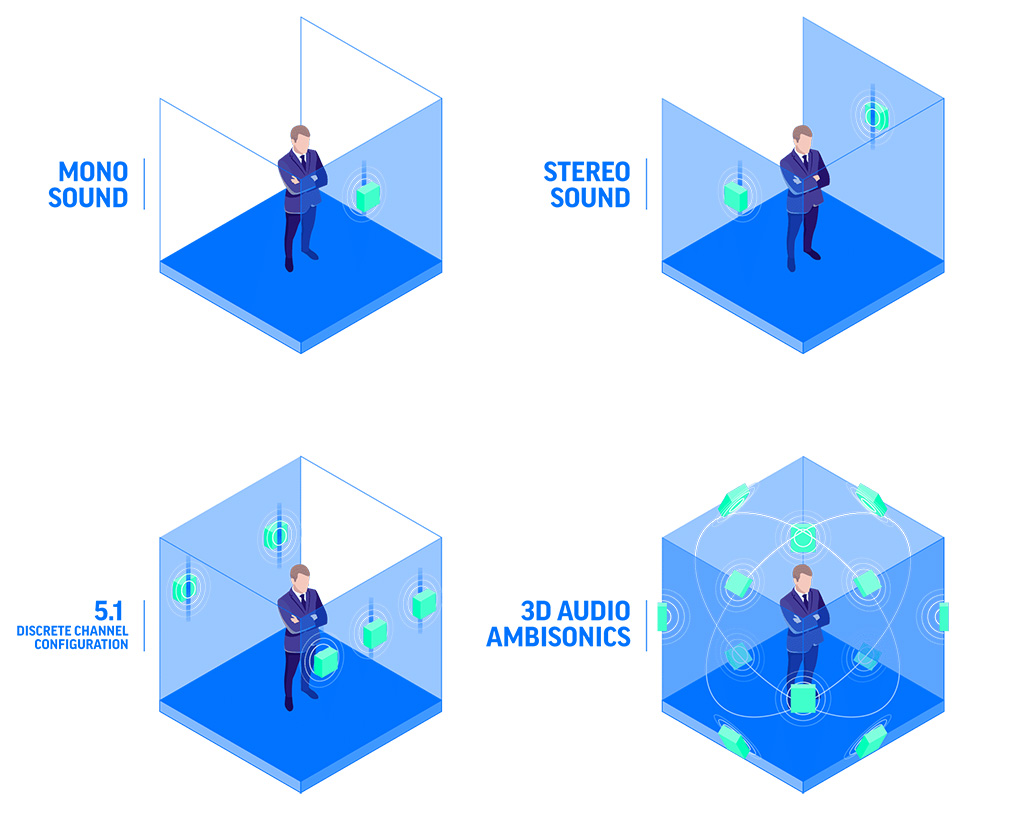

However, we encountered stuttering audio playback on mid-range smartphones and opted for an ambisonics implementation on these devices instead. Ambisonics is a hybrid between an audio format and an audio engine. The important thing is that it lets you store the whole 360° sound sphere in a single file, and that A-Frame supports it through an ambisonic component. But since the user cannot walk around the sounds and can only rotate on the spot, you might even use a simple 360° video uploaded to YouTube, which now supports the ambisonic AmbiX format.
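To see why rotation-only playback fits ambisonics so well, it helps to look at what turning the head means for a first-order signal: the omnidirectional channel W and the height channel Z stay as they are, and only the horizontal channels X and Y are mixed by a 2D rotation. The sketch below illustrates that idea for a single sample. It is not the code of any A-Frame component; the function name and the axis convention (X front, Y left, Z up) are assumptions made for this example.

```javascript
// Rotate one first-order B-format sample about the vertical axis.
// Assumed convention: X points to the front, Y to the left, Z up, and a
// positive angle turns the sound field counterclockwise seen from above.
function rotateYaw({ w, x, y, z }, angleRad) {
  const c = Math.cos(angleRad);
  const s = Math.sin(angleRad);
  return {
    w,                 // omnidirectional part is unaffected by rotation
    x: x * c - y * s,  // horizontal components rotate like a 2D vector
    y: x * s + y * c,
    z,                 // height is unaffected by a yaw rotation
  };
}

// A listener who turns their head by `headYaw` hears the field rotated
// by the opposite angle: rotateYaw(sample, -headYaw).
```

Because this is the same small computation regardless of how many instruments were mixed into the file, the playback cost does not grow with the number of sound sources.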

Using a single audio file that contains all the sound sources has the advantage of innate synchronization between the individual sources. As synchronization is crucial in music composition, we concluded that this approach is best suited to such a use case.

One disadvantage is that producing ambisonics files mixed from individual tracks or instruments is less intuitive than using A-Frame entities, and it also requires additional software.

We tested Adobe Premiere, where it is entirely possible to produce an ambisonics file from four individual mono tracks, but it lacks fine control over the directivity of the sound sources and other characteristics that are important for shaping the sound.

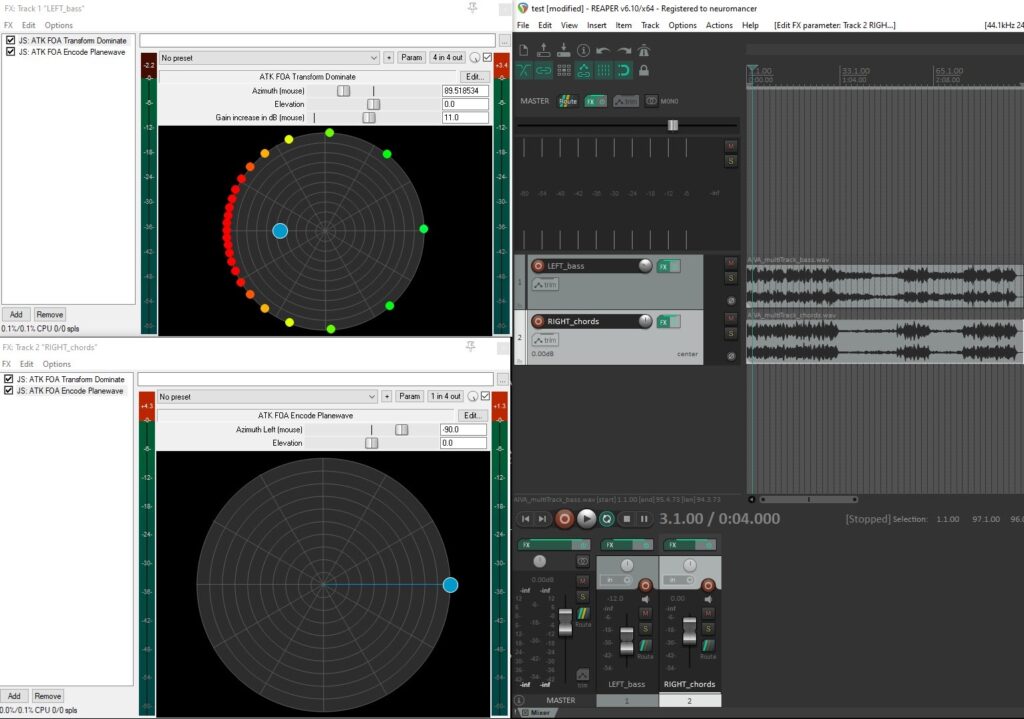

That is why we chose the Reaper DAW combined with the free Ambisonic Toolkit. We should also note that other options for mixing ambisonics exist, for example the Waves software bundle, or the Ambisonic Toolkit combined with SuperCollider, an open-source music synthesis server. It is also possible to produce ambisonics in Audacity, but Audacity currently lacks a proper implementation of the standards. We expect more tools for this task to become available over time.

Specifically, for this project we used ATK FOA Transform Dominate followed by ATK FOA Encode Planewave (see docs), both available from the Ambisonic Toolkit. This combination gives control over the directivity of each sound and its position in 3D space. You might also need to convert from the FuMa ambisonics format to AmbiX, which Adobe Premiere and YouTube support; we used the free Ambi Converter by NoiseMakers.
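For first-order material, the FuMa to AmbiX conversion itself is small enough to sketch by hand. FuMa orders the channels W, X, Y, Z and stores W about 3 dB lower, while AmbiX uses the order W, Y, Z, X with SN3D normalization, so the conversion reorders the channels and multiplies W by √2. The code below is only an illustration of that mapping with hypothetical function names; it says nothing about how the converter we used works internally, and it does not apply to higher orders.

```javascript
// Convert one first-order frame from FuMa [W, X, Y, Z] to AmbiX [W, Y, Z, X].
// Only W changes gain at first order; X, Y and Z are just reordered.
function fumaToAmbixFrame([w, x, y, z]) {
  return [w * Math.SQRT2, y, z, x];
}

// Convert an interleaved 4-channel buffer (W0, X0, Y0, Z0, W1, X1, ...).
function fumaToAmbix(interleaved) {
  if (interleaved.length % 4 !== 0) {
    throw new Error('expected 4 channels per frame');
  }
  const out = new Float32Array(interleaved.length);
  for (let i = 0; i < interleaved.length; i += 4) {
    out.set(fumaToAmbixFrame(interleaved.subarray(i, i + 4)), i);
  }
  return out;
}
```

The interleaved variant expects a typed array such as `Float32Array`, which is what decoded audio data usually arrives in when working in the browser.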

With these tools we effectively have two setups for two sets of devices: one for mobile phones, where users can only rotate on the spot, and a second for the remaining devices, such as desktops and standalone headsets, where users can both rotate and move freely.
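Choosing between the two setups can be reduced to a small decision function. The sketch below is hypothetical: `chooseSetup` and the shape of its return value are made up for illustration, and the two flags are assumed to come from A-Frame's device helpers `AFRAME.utils.device.isMobile()` and `AFRAME.utils.device.isMobileVR()`.

```javascript
// Pick the audio setup for the current device.
// `device` is a plain object so the decision can be tested without a browser.
function chooseSetup(device) {
  // Standalone headsets are handled first so they are never treated as phones.
  if (device.isMobileVR || !device.isMobile) {
    return { audio: 'positional', canMove: true };
  }
  // Phones get the single ambisonics file and rotation only.
  return { audio: 'ambisonic', canMove: false };
}

// In the browser this might be called as:
//   chooseSetup({
//     isMobile: AFRAME.utils.device.isMobile(),
//     isMobileVR: AFRAME.utils.device.isMobileVR(),
//   });
```

Keeping the decision in one place makes it easy to adjust later, for example if newer phones turn out to handle the positional setup without playback problems.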

Since on mobile phones we use rotation only, we can also omit the 3D models of the statues and substitute them with equirectangular sky maps. This further improves performance on mobile devices. Another way to deliver 360° content to mobile phones is a YouTube 360 video, as YouTube is widespread and, in a sense, a more unified platform than the browsers that support VR on mobile.

A minor advantage of using A-Frame over a YouTube 360 video is that it uses less data, because in a video even a static background has to be encoded across all frames.

Hopefully we have helped you navigate the wild seas of VR audio a bit. Let us know what you think in the comments.